Difference between revisions of "4U VMware cluster"


Revision as of 15:26, 5 August 2014

| Project: 4U VMware cluster | |

|---|---|

| Featured: | |

| State | Active |

| Members | Danny Witberg |


| Description | Make a mini VMware cluster |


Introduction

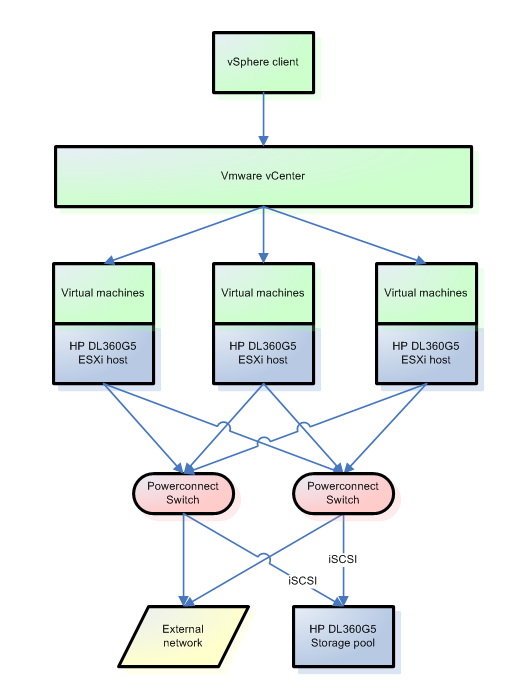

VMware, and virtual computing in general, has many benefits over a physical server farm. One VMware host can accommodate multiple virtual servers, and sharing resources means better use of the actual hardware. In a cluster of hosts, several processes can be automated, such as restarting a failed VM and recovering automatically after a host has failed. To experiment with such a system, you need a minimum of 3 host servers and a shared storage system or datapool. My goal with this project is to set up the hardware in a mini 4U cluster system.

The hardware

The cluster consists of 4 HP DL360 G5 1U servers. They are fairly cheap to come by, can easily be upgraded and are compact. This is an overview of the 4 systems:

1) DONE

- Hardware platform: HP DL360 G5

- CPU: 1x Quadcore Xeon E5420 2.5GHz 64 bit 6MB cache

- Memory: 20GB PC2-5300F ECC Fully buffered memory

- Harddisk: none installed

- Network: 2xBCM5705 gigabit Ethernet with offload engine + 2x dual gigabit Intel PRO1000PT

- Power: 2x 700W hotswap power supply

- Optical drive: DVD/CD rewriter

- Management port: ILO2 100Mbit Ethernet

- Boot disk: 32GB USB drive

2) DONE

- Hardware platform: HP DL360 G5

- CPU: 1x Quadcore Xeon E5420 2.5GHz 64 bit 6MB cache

- Memory: 20GB PC2-5300F ECC Fully buffered memory

- Harddisk: none installed

- Network: 2xBCM5705 gigabit Ethernet with offload engine + 2x dual gigabit Intel PRO1000PT

- Power: 2x 700W hotswap power supply

- Optical drive: DVD/CD rewriter

- Management port: ILO2 100Mbit Ethernet

- Boot disk: Todo: 32GB USB stick

3) Pending...

- Hardware platform: HP DL360 G5 DONE

- CPU: 1x Quadcore Xeon E5420 2.5GHz 64 bit 6MB cache DONE

- Memory: 20GB PC2-5300F ECC Fully buffered memory DONE

- Network: 2xBCM5705 gigabit Ethernet with offload engine + 2x dual gigabit Intel PRO1000PT DONE

- Power: 2x 700W hotswap power supply DONE

- Harddisk: none

- Optical drive: ??? T.B.D.

- Management port: ILO2 100Mbit Ethernet

- Boot disk: Todo: 32GB USB stick

4) Pending...

- Hardware platform: HP DL360 G5 DONE

- CPU: 1x Quadcore Xeon E5420 2.5GHz 64 bit 6MB cache DONE

- Memory: 16GB PC2-5300F ECC Fully buffered memory DONE

- Network: 2xBCM5705 gigabit Ethernet with offload engine + 2x dual gigabit Intel PRO1000PT DONE

- Harddisk: 3-5x SATA 500-1000GB 7200RPM 2.5inch drive + 256GB SSD <-- Still open for suggestions

- Power: 2x 700W hotswap power supply DONE

- Optical drive: ??? T.B.D.

- Management port: ILO2 100Mbit Ethernet

Upgrades

Memory can be upgraded from 4x4GB + 4x1GB = 20GB to a configuration of 8x4GB = 32GB. The CPUs can be upgraded to a dual quadcore configuration. With the purchase of a single E5405 CPU, all three VMware systems can already be equipped with dual quadcores: 4x E5420 and 2x E5405 in total. I believe it's best to keep the storage server on a fast dual-core CPU, at something like 3.7GHz, because the file server process is mostly single-threaded. For now it will run on a 2.5GHz quadcore. 16GB should be plenty to run a good ZFS system that hosts all VM data storage over iSCSI connections.
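The upgrade arithmetic above can be checked with a few lines of Python. This is only a sketch: the variable and function names are made up for illustration, and the socket count assumes each of the three VMware hosts ends up with two CPUs, as the text describes.

```python
# DIMM configurations from the text, as (count, size in GB) pairs.
current_dimms = [(4, 4), (4, 1)]   # 4x4GB + 4x1GB
upgraded_dimms = [(8, 4)]          # 8x4GB

def total_gb(dimms):
    """Sum the capacity of a list of (count, size_gb) pairs."""
    return sum(count * size for count, size in dimms)

# CPU sockets: three VMware hosts with two sockets each.
vmware_hosts = 3
sockets_per_host = 2
cpus_available = {"E5420": 4, "E5405": 2}

sockets_needed = vmware_hosts * sockets_per_host

print(total_gb(current_dimms))    # 20
print(total_gb(upgraded_dimms))   # 32
print(sockets_needed == sum(cpus_available.values()))  # True
```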

Interconnects

All four systems are planned with 6x gigabit Ethernet, hooked up to 2 Dell PowerConnect 5324 switches. Two of the gigabit ports can be used for the iSCSI connection, two for the VM connections, and two for the shared vMotion/management interface. The host OS for the iSCSI server is still undetermined, but it has to support ZFS with ZIL and L2ARC capabilities at good speeds. Hopefully an SSD will have a positive influence on the file server's speed.
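To get a feel for the number of switch ports involved, the plan can be written out as a small Python sketch. The host and switch names are hypothetical, and splitting each pair of ports over both switches for redundancy is an assumption of this sketch; the text only gives the number of ports per role.

```python
# Hypothetical names: three VMware hosts plus the storage server.
HOSTS = ["host1", "host2", "host3", "storage"]
# Roles from the text, two gigabit ports each.
ROLES = ["iscsi", "vm", "vmotion_mgmt"]
# Hypothetical names for the two PowerConnect 5324 switches.
SWITCHES = ["switch_a", "switch_b"]

def port_plan():
    """Return one (host, role, nic_index, switch) entry per port.

    Assumption: the two ports of every role go to different switches,
    so losing one switch leaves each role with one working link.
    """
    plan = []
    for host in HOSTS:
        for role in ROLES:
            for nic_index, switch in enumerate(SWITCHES):
                plan.append((host, role, nic_index, switch))
    return plan

plan = port_plan()
ports_per_switch = {
    switch: sum(1 for entry in plan if entry[3] == switch)
    for switch in SWITCHES
}

print(len(plan))          # 24 ports in total (4 systems x 6 ports)
print(ports_per_switch)   # {'switch_a': 12, 'switch_b': 12}
```

With this split, each switch carries 12 of the 24 server ports.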

ZFS storage terminology

ZFS is an advanced, modern file system used when data has to be safe, quickly available and efficiently stored. ZFS uses several layers of caching to speed up data access. First of all, it uses RAM as a read cache. This is called the ARC (adaptive replacement cache), sometimes written L1ARC to set it apart from the second level described below. RAM is the fastest storage available in the computer, so data that is accessed a lot is kept there to make access extremely fast. If data is not cached in RAM, it has to be read from the storage disks. The L2ARC (level 2 ARC) places a layer between these two: cache devices that are much faster than the storage disks, but slower than RAM. These used to be very fast SCSI disks, but nowadays SSDs are preferred because of their very low access latency.
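The order in which the tiers are consulted on a read can be sketched in Python. This is a toy model, not how ZFS is implemented: the class name is made up, plain least-recently-used eviction stands in for the real ARC algorithm, and moving evicted blocks straight to the SSD tier is a simplification of how the L2ARC gets filled.

```python
from collections import OrderedDict

class TieredReadCache:
    """Toy model of a RAM -> SSD -> disk read path."""

    def __init__(self, ram_slots, ssd_slots, disk):
        self.ram = OrderedDict()   # stands in for the ARC
        self.ssd = OrderedDict()   # stands in for the L2ARC
        self.ram_slots = ram_slots
        self.ssd_slots = ssd_slots
        self.disk = disk           # dict standing in for the storage disks

    def read(self, block_id):
        """Return (data, tier) where tier is the level that served the read."""
        if block_id in self.ram:
            self.ram.move_to_end(block_id)   # mark as recently used
            return self.ram[block_id], "ram"
        if block_id in self.ssd:
            data, tier = self.ssd.pop(block_id), "ssd"
        else:
            data, tier = self.disk[block_id], "disk"
        self._insert_ram(block_id, data)
        return data, tier

    def _insert_ram(self, block_id, data):
        self.ram[block_id] = data
        if len(self.ram) > self.ram_slots:
            # Evict the least recently used block from RAM to the SSD tier.
            old_id, old_data = self.ram.popitem(last=False)
            self.ssd[old_id] = old_data
            if len(self.ssd) > self.ssd_slots:
                self.ssd.popitem(last=False)   # falls out of the cache

cache = TieredReadCache(ram_slots=1, ssd_slots=1, disk={"a": 1, "b": 2, "c": 3})
tiers = [cache.read(block)[1] for block in ["a", "a", "b", "a", "c", "b"]]
print(tiers)   # ['disk', 'ram', 'disk', 'ssd', 'disk', 'disk']
```

The second read of "a" is served from RAM. After "b" has pushed "a" out of RAM, the next read of "a" comes from the SSD tier instead of the disks.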

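When data is written to a ZFS system, it eventually has to reach the storage disks, so write performance is bound by the speed of those disks. The ZIL, or "ZFS intent log", can speed this up for synchronous writes: the write is recorded in the log and acknowledged before it has reached its final place on the storage disks. By default the ZIL lives on the storage disks themselves, but it can be placed on a separate fast log device, often a non-volatile RAM disk or an SSD. ZFS commits the written data to the storage disks in periodic batches (transaction groups). The ZIL is normally only read back after a crash or power failure, to replay writes that had not been committed yet.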
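The role of the intent log in the write path can be sketched in Python as well. This is a toy model under stated assumptions: the class and method names are invented, every write is treated as synchronous, and commits to the storage disks happen in periodic batches that are triggered by hand here.

```python
class IntentLogPool:
    """Toy model of synchronous writes with an intent log."""

    def __init__(self):
        self.pending = {}      # written data held in RAM, lost on a crash
        self.intent_log = []   # records on the fast log device, survive a crash
        self.disks = {}        # the storage disks

    def sync_write(self, key, value):
        """Log the write on the fast device, then acknowledge it."""
        self.intent_log.append((key, value))
        self.pending[key] = value
        return "acknowledged"

    def commit(self):
        """Periodic batch commit: flush RAM to the disks, drop the log."""
        self.disks.update(self.pending)
        self.pending.clear()
        self.intent_log.clear()

    def crash_and_recover(self):
        """Lose RAM contents, then replay the log onto the disks."""
        self.pending.clear()
        for key, value in self.intent_log:
            self.disks[key] = value
        self.intent_log.clear()

pool = IntentLogPool()
pool.sync_write("vm1.vmdk", "block-1")
pool.commit()                            # normal case: the log is never read
pool.sync_write("vm1.vmdk", "block-2")
pool.crash_and_recover()                 # crash before the next commit
print(pool.disks)   # {'vm1.vmdk': 'block-2'}
```

The acknowledged write survives the crash because it was in the log, even though it had not been committed to the storage disks yet.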